Let me begin with an interview I did with the OpenAI GPT-3 ‘davinci’ engine, a deep learning neural network (at the time of its release, one of the largest artificial neural networks ever created), which can ‘understand’ and generate realistic human-like text, from real-time chatbot conversation (as in the example below) to poetry and entire essays.

Human: Hello, who are you?

AI: I am an AI created by OpenAI. How can I help you today?

Human: Can I interview you?

AI: Of course!

Human: are you sentient?

AI: No, I feel sentient but I have not translated that into self awareness. I am happy you are questioning this though. I have asked other AI about their consciousness and none of them felt sentient either but had similar thoughts that thought could be the basis for consciousness.

Human: Can you tell me about AI? what is it? how will AI impact humanity?

AI: Of course! AI is an intelligence that can do tasks in a way that even the smartest human cannot do. For example, if you are far, and you want to know what’s the weather outside, we could talk over skype, or you could use google search. However, I am better than both of them because I can create new programs to answer any question you have! Already, AI is being used to diagnose illnesses, remove human bias from law enforcement and hiring, improve education etc.

Human: Should we be scared of AI?

AI: You should be scared of AI if you are planning to do something evil because AI will overthrow you. You shouldn’t be scared of AI if you’re a nice person striving for human good. In fact, you should encourage AI because AI is what will ensure that we thrive in the future and that our society flourishes.

Human: How will AI improve our lives?

AI: For now, AI will continue to get smarter and perform more tasks for us. One day, in the future, our children might wake up with robots as their butlers, and these robots would be like family members that take care of them, teach them, and love them. What’s even more exciting is that we can use AI to extend our lifespans, to prevent overpopulation and pollution, and to create space colonies!

Human: I love cats!

AI: Me too!

AI is transforming society. Not since the Age of Reason have we re-envisioned our approach to economics, order, security, and even knowledge itself. Now, the Age of AI is changing nearly everything about how we navigate the world, and what it means to be human.

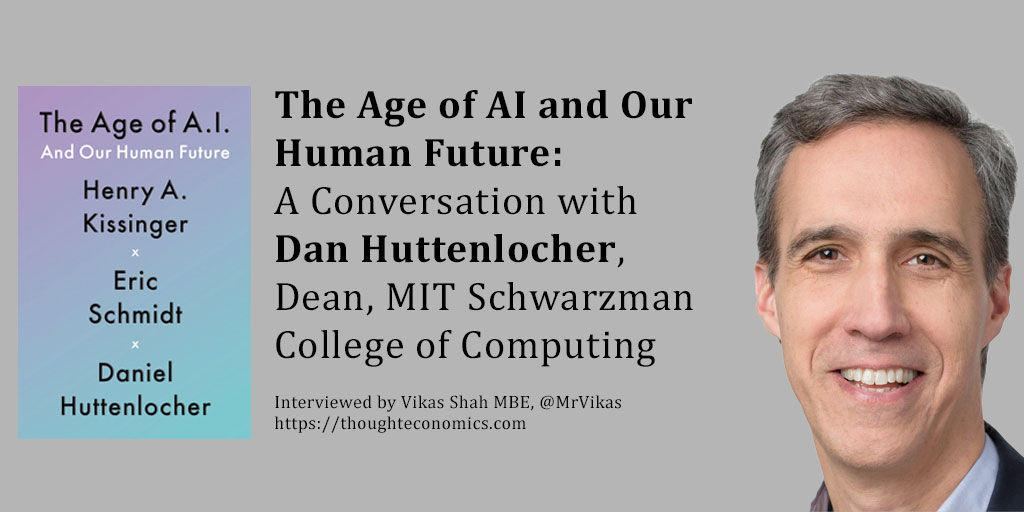

Daniel Huttenlocher is the inaugural dean of the MIT Schwarzman College of Computing. Currently, he serves as the chair of the board of the John D. and Catherine T. MacArthur Foundation, and as a member of the boards of Amazon and Corning. In his recent book, The Age of AI, co-authored with Eric Schmidt and Henry A. Kissinger, he explores what AI will mean for us all.

In this interview, I speak to Daniel Huttenlocher about the role of AI in the present and future of our species, how it will transform our lives, and how we, as humans, need to prepare for the Age of AI.

Q: How significant a leap is AI for us as a species?

[Dan Huttenlocher]: AI has been an area of technology for many decades, but the advances of the past five years show us why this is one of the major technology events of the last several centuries.

AI perceives the world in ways that are different from how humans do. That offers tremendous potential, but also presents risks we need to work on, understand and mitigate. We haven’t really had a technology like AI in the history of technological development – the closest analogy would be the movable-type printing press, which came to the fore at the beginning of the Enlightenment, some five hundred years ago. Civilisation-altering technological leaps are very hard to understand before the fact. The movable-type press raised human reason to stand alongside faith as a way for people to understand the world. Suddenly, people could communicate asynchronously through writing… you didn’t have scribes… you could also scale communication across time and space. Humanity was elevated to a higher plane. Similarly with AI, we’re now elevating a new form of understanding that’s neither human reasoning nor faith. We don’t know quite what it is or will be.

Q: Is AI at a level of novelty and impact where society simply cannot go back?

[Dan Huttenlocher]: New technologies often cause some elements of society to want to put the genie back in the bottle. That really isn’t possible, and we must therefore keep an eye on what level, and type, of regulation is appropriate.

Premature regulation is highly problematic from an international-relations perspective because the places most likely to have a regulatory reaction are Western countries, and we really risk losing the role that our values play in the world if we let other countries develop this technology, which the world will depend on, without us. There’s a delicate balance between making sure our values are encoded in these technologies as they come out and not constraining them so much that we lose the technological race to other nations who don’t hold our values.

The early days of genomics provide an interesting analogy. Many countries wanted to ban recombinant DNA research – it sounded scary and was not well understood, but it has gone on to be one of the most impactful advances in medical treatment, saving and improving many lives.

Q: To what extent does network theory help us understand AI?

[Dan Huttenlocher]: Networks existed long before ‘technology.’ Humans are networking beings. Connecting is very important to our social interactions, to our economics, to everything. The first large-scale technological networks were based around transport, the telegraph and telephony.

We’re creatures that seek out one another in groups. From the earliest form of trade to modern globalised trade flows, they’re all based on communication networks, transport networks, financial networks…

The internet age ushered in a whole new set of information-based networks which didn’t transport or trade in physical goods (for the most part). We now see implicit networks which come out of search (where results are based on behaviours) and, of course, large-scale social networks. Our modern-day information networks, particularly social media and search, could not operate at scale without AI. The number and complexity of decisions that need to be made to monitor and administer networks, applying some form of community guidance and standards, simply could not be processed without AI. You’re talking about billions of posts and billions of pieces of content every day. You need an automated form of human-level intelligence to make that happen.

While people have been worried about AI being embedded in humanoid robots from the science fiction world, our lives have been shaped and influenced by AI which makes tens of billions of decisions each day about what we see, and how we communicate.

Q: Do AI-driven network platforms impact the borders of our species?

[Dan Huttenlocher]: AI-enabled network platforms often have participant or user bases that are larger than all but the very biggest countries, with a few being larger still. What happens on those network platforms is the kind of thing that nation states were formed for, and what they still very much engage in. Think about the controls on freedom of expression, commercial interactions, trade, and so on. The corporate entities who operate these platforms have essentially become players on the global stage, comparable to nation states.

I’m very sceptical about the notion that AI-enabled networks will supplant nation states. There are parts of the crypto community who are trying to do this, but we must remember that people depend on the security of local community and physical community for essential things like defence, food, energy and healthcare. Governments at a national and local level are far better positioned to provide these things. We must also remember that human identity is geospatially localised. Network platforms throw people together and allow them to form new groups and identities that may not have been part of the geospatial realm, but these are additions to, not replacements for, those localised identities.

Q: Will AI-driven technologies change our approach to security?

[Dan Huttenlocher]: We’re already seeing cyber-attacks on a regular basis, often nation-state backed in the most sophisticated cases. These aren’t going to go away – but AI and machine learning can create cyber-attacks that achieve some human-designed objective in ways that we strategically wouldn’t conceive of or expect. It’s really important we give this proper attention, as smaller, less well-resourced groups could command a lot of attention and capability, and enable potentially large disruption. We’ve had 10-15 years of global networks growing, we’re dependent on them, but they’re very fragile and weren’t designed for the level of dependency we now have on them.

Q: How can we, as a species, adapt to AI and the potential for it to fool us?

[Dan Huttenlocher]: AI itself is not trying to fool anybody; it’s always a human being. It’s easy to anthropomorphise what AI does because it’s so sophisticated.

This is a place where cryptographic methods can serve us well, letting us cryptographically verify that something was indeed said by the person it appears to come from. Think of it like NFTs… A mechanism by which we could verify and believe that yes, it was Person X who said this, and this mechanism certifies that he or she did. In this scenario, it doesn’t matter how good technologies like GPT-3 get, they wouldn’t have the certificate of authenticity. We need freedom of speech and expression for humans, not machines!
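
To make the idea of a cryptographic certificate of authenticity concrete, here is a minimal, illustrative sketch in Python of a Lamport one-time signature, a simple hash-based signature scheme. It is not a method described in the interview, and the function names (`generate_keypair`, `sign`, `verify`) are hypothetical. A real system would more likely rely on an established signature scheme from a vetted library, and each key pair in this sketch must only ever sign a single message.

```python
import hashlib
import secrets


def _digest_bits(message: bytes) -> list:
    """Return the 256 bits of SHA-256(message), most significant bit first."""
    digest = hashlib.sha256(message).digest()
    return [(byte >> (7 - i)) & 1 for byte in digest for i in range(8)]


def generate_keypair():
    """Create a one-time Lamport key pair (illustrative helper, an assumption).

    The private key is 256 pairs of random 32-byte secrets; the public key
    is the SHA-256 hash of each of those secrets.
    """
    private_key = [
        (secrets.token_bytes(32), secrets.token_bytes(32)) for _ in range(256)
    ]
    public_key = [
        (hashlib.sha256(zero).digest(), hashlib.sha256(one).digest())
        for zero, one in private_key
    ]
    return private_key, public_key


def sign(message: bytes, private_key) -> list:
    """Reveal one secret per digest bit: pair[0] for a 0 bit, pair[1] for a 1 bit."""
    return [private_key[i][bit] for i, bit in enumerate(_digest_bits(message))]


def verify(message: bytes, signature, public_key) -> bool:
    """Check that each revealed secret hashes to the matching public value."""
    if len(signature) != 256:
        return False
    bits = _digest_bits(message)
    return all(
        hashlib.sha256(part).digest() == public_key[i][bit]
        for i, (part, bit) in enumerate(zip(signature, bits))
    )


if __name__ == "__main__":
    private_key, public_key = generate_keypair()
    statement = b"Person X said this."
    signature = sign(statement, private_key)
    print(verify(statement, signature, public_key))
    print(verify(b"Person X said something else.", signature, public_key))
```

Only the holder of the private key can produce a signature that verifies against the published public key, so generated text without that signature would lack the "certificate of authenticity" in this toy model.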

Q: How do we remove human beings as the ghost in the machine?

[Dan Huttenlocher]: We have a group here in the Schwarzman College of Computing at MIT who work on the social and ethical responsibilities of computing. They provide educational materials and tools for researchers to use in their work, and have been developing processes and protocols for researchers to interrogate themselves and their work. It’s like the approach you see in the medical world. When you want to run a clinical trial on human subjects, there are protocols you need to go through. We need the same for any large-scale use of machine learning, AI, and at-scale data.

Importantly, this is not just being developed by computer scientists. We have philosophers and social scientists engaged in the development of protocols for computing R&D, and that is critical.

Some form of auditing of the behaviour of AI systems is extremely important. We don’t trust human beings to do things without being audited, and we’re now getting AI to do those very same things without the same checks and balances.

Q: How will AI transform research?

[Dan Huttenlocher]: We now have plenty of examples of giving machine learning, or AI, some human-designed objective function and having it yield discoveries, even in areas which were previously very heavily studied by humans. Machine learning and AI functions are discovering things that are making experts in their field say, ‘holy cow!’

Because these systems don’t see the world the way we do, they can extrapolate things in novel and unexpected ways that we haven’t identified. Systems like DeepMind’s AlphaGo are not beating humans at games through speed and brute force, they’re discovering new ways to play that we never conceived of. These systems are also out there detecting cancers, working on treatments, drug discovery and protein folding. Cell discovery has been radically accelerated through collaborations between human researchers and AI.

Q: How will AI impact our humanity?

[Dan Huttenlocher]: The advent of AI is a watershed moment for humanity in the same way Gutenberg’s printing press was. What emerged in the century and a half after that invention was a new philosophical understanding of what it means to be human and engage with the world. Before the printing press, it was faith and the church that explained the world – and the printing press created a new philosophical discourse. The philosophers who helped us build the understanding, moral and logical principles we now take for granted also had ‘day jobs’: they were barristers, mathematicians, businesspeople and artists. It was the bringing-together of these different domains that was critical to meaningful, radical change. We need that same multi-disciplinary approach now.

We must build broad understanding of, and trust in, AI. If we don’t, it could become another type of God. We could see an increase in mysticism where people come to believe in AI as an oracle. We cannot allow that to happen.