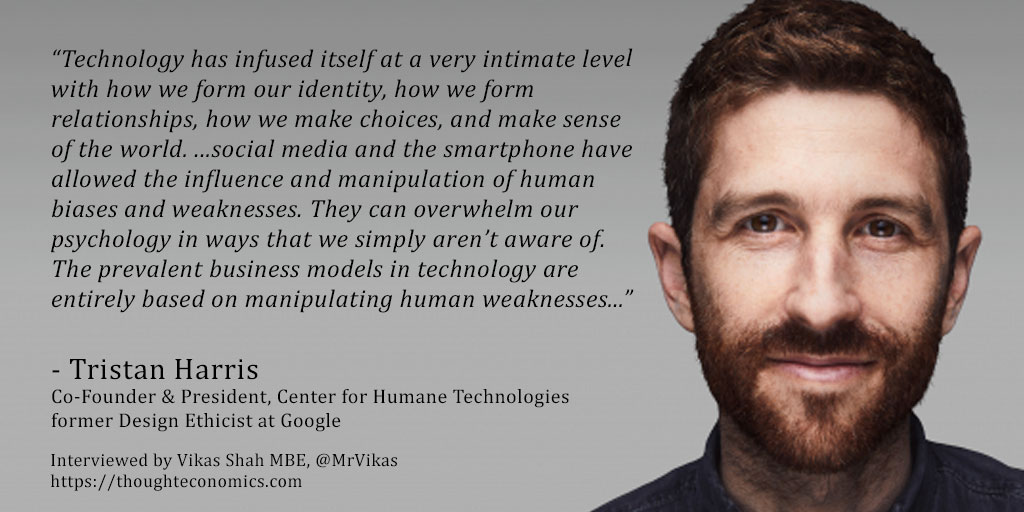

Called “the closest thing Silicon Valley has to a conscience” by The Atlantic magazine, Tristan Harris spent three years as a Google Design Ethicist developing a framework for how technology should “ethically” steer the thoughts and actions of billions of people from screens. He is now co-founder and president of the Center for Humane Technology, whose mission is to reverse ‘human downgrading’ and realign technology with humanity. He also co-hosts the Center for Humane Technology’s *Your Undivided Attention* podcast with co-founder Aza Raskin.

Tristan has spent more than a decade studying the influences that hijack human thinking and action. From his childhood as a magician to his work at the Stanford Persuasive Technology Lab, Tristan became concerned about the need for ethical and humane technology.

Marc Benioff, CEO of Salesforce, notes: “Tristan Harris is probably the strongest voice in technology pointing where the industry needs to go. This is a call to arms and everyone needs to hear him now.”

In this exclusive interview, I spoke to Tristan Harris about how the technologies of social media and the smartphone are destroying our society, and what we can do to pull it back from the brink.

Q: When did our relationship with technology go wrong?

[Tristan Harris]: There have been plenty of points in history where we’ve had to be conscious of the technology we’ve made and the level of harm it could create. Biological and nuclear weapons are just two examples.

Today, technology has infused itself at a very intimate level into how we form our identity, how we form relationships, how we make choices and how we make sense of the world. The technologies of social media and the smartphone have made it possible to influence and manipulate human biases and weaknesses. They can overwhelm our psychology in ways that we simply aren’t aware of. The prevalent business models in technology are entirely based on manipulating human weaknesses through advertising and engagement, through harvesting attention and through surveillance capitalism. These models are then amplified by our own instincts for social obligation and reciprocity.

Technology has, both intentionally and through unintended consequences, manipulated human weakness. You can view most of the harms that we are now experiencing as rooted in this: addiction, distraction, mental health issues, depression, isolation, polarisation, conspiracy thinking, deep-fakes, virtual influencers, and our inability to know what is and isn’t true.

Our past technologies, such as hammers, didn’t manipulate or influence our weaknesses. They didn’t have a business model dependent on building a voodoo doll of the hammer owner that allowed them to predict the hammer owner’s behaviour, showing them videos of home construction so that they would use the hammer every day. A hammer just sits there, patiently waiting to be used.

Technology is systematically exploiting human weakness, and the costs are huge. This is what degrades our quality of life and how we make sense of the world. This is what destroys our ability to form identity, to form relationships and to make choices. This is what stops us from making the decisions that could move us towards solving climate change and dealing with injustice and inequality.

We have less and less time to make increasingly consequential choices, and technology is degrading the quality of the sense-making that informs those choices.

Q: Why are there no checks & balances on social and other technologies that are harming us?

[Tristan Harris]: We are seeing the results of continual negligence here. It’s the accumulation of thousands of seemingly minor decisions to make platforms ‘stickier,’ to drive user engagement and to encourage people to share content. These decisions were made quickly, driven by the urgency to beat the competition and gain market dominance, not by what is or is not good for us as a society. The result is that we’re now 10 years into the mass warping of the human collective psyche because of a lack of checks and balances.

To even begin to correct what has already happened, the world must collectively wake up from a mass delusion that has warped our trajectory as a civilisation.

Technology claims to be showing us a mirror of what was already present in society, such as racism and conspiracy theories. In reality, technology is a funhouse mirror with a feedback loop engineered to show us the most egregious parts of society, the parts that are better at keeping our attention. The mirror gets more and more warped, but we mistake it for an honest and neutral view of who we are.

Q: How urgent are the issues around how technologies are impacting our minds?

[Tristan Harris]: This is an existentially urgent issue. Many people have said that the only way to change what’s happening is for the state to bind the predatory forces of the market and the extractive logic of surveillance capitalism. It’s very hard to get the state to bind the market when you don’t have a population or culture that understands the harm being done. We are now starting to see a global culture that is much more aware that we need to check these companies, and even governments are beginning to take these issues more seriously and tackle them with more urgency.

Q: How are social technologies impacting our national security?

[Tristan Harris]: People talk about foreign interference in elections, but it is actually foreign interference in culture. Our adversaries are coming into our country, deliberately provoking and exacerbating tensions that can drive culture wars and civil war. We’re seeing this across the African continent, the United States, Europe and the Global South. Some of this is also happening organically in places like Ethiopia and Myanmar.

After World War II, the great powers couldn’t launch any direct wars with each other, because kinetic war between nuclear nations was practically impossible. However, other forms of warfare, such as narrative, financial and economic warfare, were and still are present. More recently, the kind of warfare that has become popular is cultural or informational. In this case, you don’t need to attack a country directly. You can take the existing tensions in a society and magnify them to the point where society falls apart. It’s far less expensive.

The US can spend billions on a wall or trillions on a nuclear arsenal, but that won’t protect you if your computer system still uses the default password. We’ve been obsessed with protecting our physical borders, but our digital borders are wide open, and our society runs in a digital world. If Russia or China tries to fly a plane into the United States, it will be shot down by a USAF F-35 that we spent a trillion dollars developing. Meanwhile, if they try to fly an information plane into the United States, they’re met with Facebook and Google algorithms that run an auction to get them the maximum audience for the cheapest price. They’re met with a white glove that takes them directly to their target.

Instead of militaries fighting militaries, we now have militaries, or militarily motivated agents, that are able to activate civilians in each other’s countries. In one recent example from about a year ago, Russia targeted US veteran groups and Vietnam War veteran groups to stoke anti-government sentiment and make their members more sceptical of the government.

This kind of activity is going on constantly, and it’s incredibly dangerous. The biggest issue today is that social media is a global national security threat, and one that we’re not even spending billions of dollars to protect against.

Q: How can we balance access to information with the need for trust and truth?

[Tristan Harris]: We often misplace the spotlight on the need for really good access to information or truth, rather than on the fact that we need trust. Imagine a person having perfect access to libraries full of high-quality information. That’s the aim, but we’re in a world where people don’t trust information if it doesn’t agree with their world view, irrespective of its provenance and accuracy.

Technology (in particular through the actions of foreign actors) has been eroding our capacity to trust anything. This has created a pseudo-hypocrisy in which we don’t trust information, but we do trust the core beliefs we hold.

We need a new Manhattan Project to rebuild trust in a world that has been deliberately attacked at its cultural foundations. This is not about state actors nuking each other’s electricity grids, but about attacking each other’s trust and cultural infrastructure, which is just as critical.

Q: How are the technologies of social media playing a role in global development and how nations form and collapse?

[Tristan Harris]: Imagine if Coca-Cola said, ‘Well, we *could* take the sugar out of our drink, but how else would we give the world diabetes?’ We have information diabetes.

Companies like Facebook are creating Free Basics and free operator solutions, which have literally colonised countries with infrastructure. They will do a deal with a telco provider in Kenya, Myanmar or Ethiopia that enables them and the operator to crowd out competitors. When you get a new phone in these countries, it comes pre-installed with Facebook and with free access to Facebook. Take Ethiopia as an example: you have 6 major languages and 90 dialects, and now all the critical information being provided to people is user-generated content. The largest source of information in local dialects for many people is Facebook. There’s no public library, no civic infrastructure and no guardian. You just have people making assertions and pushing their biases and hate through the tools of the attention economy. And that economy rewards outrage and incendiary material.

We have a system that rewards the worst aspects of our information society.

There’s a joke within Facebook that if you want to know which countries will have a genocide in the next couple of years, look at the ones that have Facebook Free Basics. We grew up with the kind of epistemic inoculation that came from seeing email chain letters and viral content. We grew up learning the ways that people could hack and manipulate us, and that prepared us well, but not for the social media world. I mentioned Ethiopia for a reason: people are saying it is going to be genocide number two for Facebook, after Myanmar. You have a situation in which user-generated content is becoming the basis for what people believe is true or false. It’s utterly frightening.

The Rohingya Muslim minority in Myanmar didn’t have to be on Facebook to be persecuted by those who were calling on Facebook for the Rohingya to be killed. The Muslims who were lynched in India didn’t have to be on WhatsApp to be killed as a result of the fake news circulating on that platform. People reading this may think, ‘Well, I’m not on TikTok and I don’t use YouTube or Facebook,’ but you still live in a country where your election is decided by those platforms. In Brazil, the entire country uses Facebook, and fake news caused Bolsonaro to be elected. He is a man who is wiping out the lungs of the earth, the Amazon, and shortening our timeline on climate change, which affects everybody in the world, whether we are on Facebook or not.

Q: What gives you hope for the future?

[Tristan Harris]: We have to recognise that viral, user-generated content is not a safe way to operate the information environment of a society. We need a responsible, conscious and humane culture to actually run a bottom-up, decentralised information society. That involves changing certain things. We have to recouple power with responsibility.

If I go to a store to buy a kitchen knife, I don’t have to show ID or get a background check. If I buy a gun, I will at the very least need to show ID and get a background check. If I want to buy a cruise missile, I have to go through West Point Academy and become a member of the Joint Chiefs of Staff. We have to raise the level of training, wisdom and ethics to match the power people have.

We have to ask fundamental questions about the safety of a society where anyone can post content that can go viral and be seen by a billion people within 24 hours. How can we create a world where we can use enabling technologies without creating the fragility we’re seeing, which threatens our very social fabric?

This is the problem beneath so many other problems in our society. If you care about inequality, racial justice, climate change, or most of the other issues we face, you will see that they depend on what people think about those issues, and that’s where social media is causing so many problems. What gives me hope is that we can get the world to realise that we can all come together, because no one wants a world where it’s impossible to agree and democracies just fall apart and collapse in on themselves.